I know, I haven’t been posting much lately. 5.5 upgrades got postponed due to the new storage platform needing my immediate attention and being a speaker at the Percona Live conference in April also needs a lot of attention.

One of the things I want to try out is running multiple MySQL instances on the same machine. The concept remained in the back of my mind ever since I attended Ryan Thiessen’s presentation on the MySQL conference 2011 but we never actually got a proper usecase for it. Well, with the new storage platform it would be really beneficial so an excellent use case to try it out! So what have I been busy with in the past week? That’s right: running multiple instances MySQL on one single server. 😉

Even though it is not well documented and nobody describes the process in depth it is not that complicated to get multiple instances running next to each other. However it does involve a lot of changes in the surrounding tools, scripts and monitoring. For example, this is what I changed so far:

1. MySQL startup script

Yes, you really want this baby to support multiple instances. I’ve learned my lesson with the wildgrowth of copies of the various MMM init scripts.

2. Templating of configs

If you want to maintain the instances well you should definitely start using a fixed template which includes a defaults file. In our case I created one defaults file for all instances and each and every instance will override the settings of the defaults file. Also some tuning parts are now separated from the main config.

3. Automation of adding new instances to a host

Apart from a bunch of config files, data directory you really want to have some intelligence when adding another instance. For example only the innodb_buffer_pool_size needs to be adjusted for each new instance you add.

4. Automation of removing instances from a host

Part of the step above: if you can add instances, they you need to be able to remove an instance. This should be done with care as it will be destructive. 😉

After this there is still a long extensive list of things to be taken care of:

1. Automation of replication setup

The plan is to keep things simple and have two hosts replicate all instances to each other. So the instance 3307 on host 1 will replicate to instance 3307 on host 2 (and back), instance 3308 on host 1 will replicate to instance 3308 on host 2 (and back), etc.

2. HA Monitoring needs thinking/replacement

I haven’t found a HA Monitoring tool that can handle multiple instances on one host.

Why is this a problem?

If only one of the MySQL instances needs maintenance you can’t use the current tools unless you are willing to make all other instances unavailable as well. Also what will you do when the connection pool of one instance gets exhausted? Or if one instance on both servers die?

3. Backup scripts needs some changes

Obviously our backup tools (wrapper scripts around xtrabackup) need some alteration. We are now running multiple instances, so we need to backup more than one database.

4. Cloning scripts need some changes

We have a script that can clone a live database (utilizing xtrabackup) to a new host. Apart from the fact that it assumes it needs to clone only one single database we might also go for full cloning of all instances

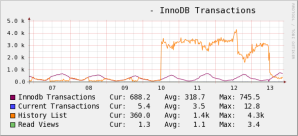

5. Monitoring needs to understand multiple instances

Our current (performance) monitoring tools, like Nagios/Cacti/etc, only assume one MySQL instance per host. At best I can implement the templates multiple times, but that also increases the number of other checks with the same factor.

And there obviously a lot more things I haven’t thought of yet. As you can see I’ll be quite occupied in the upcoming period…